A History of Climate Change Science and Denialism

The girl got up to speak before a crowd of global leaders. “Coming here today, I have no hidden agenda. I am fighting for my future. Losing my future is not like losing an election or a few points on the stock market. I am here to speak for all generations to come.” She continued: “I have dreamt of seeing the great herds of wild animals, jungles and rainforests full of birds and butterflies, but now I wonder if they will even exist for my children to see. Did you have to worry about these little things when you were my age? All this is happening before our eyes.” She challenged the adults in the room: “Parents should be able to comfort their children by saying ‘everything’s going to be alright’, ‘we’re doing the best we can’ and ‘it’s not the end of the world’. But I don’t think you can say that to us anymore.”

No, these were not Greta Thunberg’s words earlier this year. This appeal came from Severn Suzuki at the Rio Earth Summit back in 1992. In the 27 years since, we have produced more than half of all the greenhouse gas emissions in history.

Reading recent media reports, you could be forgiven for thinking that climate change is a sudden crisis. From the New York Times: “Climate Change Is Accelerating, Bringing World ‘Dangerously Close’ to Irreversible Change.” From the Financial Times: “Climate Change is Reaching a Tipping Point.” If these articles surprised Americans, that reveals far more about the national discourse than about any new climate science. Scientists have understood the greenhouse effect since the 19th century. They have understood the potential for human-caused (anthropogenic) global warming for decades. Only the fog of denialism has obscured this long-held scientific consensus from the general public.

Who knew what when?

Joseph Fourier was Napoleon’s science adviser. In the early 19th century, he studied the nature of heat transfer and concluded that given the Earth’s distance from the sun, our planet should be far colder than it was. In an 1824 work, Fourier explained that the atmosphere must retain some of Earth’s heat. He speculated that human activities might also impact Earth’s temperature. Just over a decade later, Claude Pouillet theorized that water vapor and carbon dioxide (CO2) in the atmosphere trap infrared heat and warm the Earth. In 1859, the Irish physicist John Tyndall demonstrated empirically that certain molecules such as CO2 and methane absorb infrared radiation. More of these molecules meant more warming. Building on Tyndall’s work, Sweden’s Svante Arrhenius investigated the connection between atmospheric CO2 and the Earth’s climate. Arrhenius devised mathematical rules for the relationship. In doing so, he produced the first climate model. He also recognized that humans had the potential to change Earth’s climate, writing “the enormous combustion of coal by our industrial establishments suffices to increase the percentage of carbon dioxide in the air to a perceptible degree.”

Later scientific work supported Arrhenius’ main conclusions and led to major advances in climate science and forecasting. While Arrhenius’ findings were discussed and debated in the first half of the 20th century, global emissions kept rising. After WWII, emissions growth accelerated and began to raise concerns in the scientific community. During the 1950s, American scientists made a series of troubling discoveries. Oceanographer Roger Revelle showed that the oceans had a limited capacity to absorb CO2. Furthermore, CO2 lingered in the atmosphere far longer than expected, allowing it to accumulate over time. At the Mauna Loa Observatory, Charles David Keeling conclusively showed that atmospheric CO2 concentrations were rising. Before John F. Kennedy took office, many scientists were already warning that then-current emissions trends could drastically alter the climate within decades. Revelle described the global emissions trajectory as an uncontrolled and unprecedented “large-scale geophysical experiment.”

In 1965, President Johnson received a report from his Science Advisory Committee that addressed climate change. The report’s introduction explained that “pollutants have altered on a global scale the carbon dioxide content of the air.” The scientists wrote that they “can conclude with fair assurance that at the present time, fossil fuels are the only source of CO2 being added to the ocean-atmosphere-biosphere system.” The report then discussed the hazards posed by climate change, including melting ice caps, rising sea levels, and ocean acidity. Based on the available data, it concluded that by the year 2000, atmospheric CO2 would be 25% higher than pre-industrial levels, at 350 parts per million.

The report was accurate except for one detail: humanity increased its emissions faster than expected, and by 2000, CO2 concentrations were measured at 370 parts per million, nearly 33% above pre-industrial levels.

Policymakers in the Nixon Administration also took notice of the mounting scientific evidence. Adviser Daniel Patrick Moynihan wrote to Nixon that it was “pretty clearly agreed” that CO2 levels would rise by 25% by 2000. The long-term implications could be dire: with rising temperatures and sea levels, it would be “goodbye New York. Goodbye Washington, for that matter,” Moynihan wrote. Nixon himself pushed NATO to study the impacts of climate change, and in 1969 NATO established the Committee on the Challenges of Modern Society (CCMS), partly to explore environmental threats.

The Clinching Evidence

By the 1970s, the scientific community had long understood the greenhouse effect. With increasing accuracy, researchers could model the relationship between atmospheric greenhouse gas concentrations and Earth’s temperature. They knew that CO2 concentrations were rising and that human activities were the likely cause. What they lacked was conclusive empirical evidence that global temperature was rising. Some researchers had begun to notice an upward trend in temperature records, but global temperature is affected by many factors. The scientific method is an inherently conservative process: scientists do not simply “confirm” a hypothesis, but instead rule out alternative and “null” hypotheses. Despite the strong evidence and logic for anthropogenic global warming, researchers needed to see the signal (warming) emerge clearly from the noise (natural variability). Given short-term temperature variability, that signal would take time to emerge fully. Meanwhile, as research continued, other alarming findings were published.

Scientists knew that CO2 was not the only greenhouse gas humans were adding to the atmosphere. During the 1970s, research by James Lovelock revealed that levels of human-produced chlorofluorocarbons (CFCs) were rapidly rising. Used as refrigerants and propellants, CFCs were about 10,000 times as effective as CO2 at trapping heat. Later, scientists discovered that CFCs also destroy the ozone layer.

In 1979, at the behest of America’s National Academy of Sciences, MIT meteorologist Jule Charney convened a dozen leading climate scientists to study CO2 and climate. Using increasingly sophisticated climate models, the scientists refined estimates of the scale and speed of global warming. The Charney Report’s foreword stated, “we now have incontrovertible evidence that the atmosphere is indeed changing and that we ourselves contribute to that change.” The report “estimate[d] the most probable global warming for a doubling of CO2 to be near 3°C.” Forty years later, newer observations and more powerful models have supported that original estimate. The researchers also forecast that CO2 levels would double by the mid-21st century. The report’s expected rate of warming agreed with figures posited by John Sawyer of the UK’s Meteorological Office in a 1972 article in Nature. Sawyer projected warming of 0.6°C by 2000, which also proved remarkably accurate.
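The Charney estimate is stated per doubling because of a relationship Arrhenius had already recognized: multiplying the CO2 concentration by a fixed factor adds roughly a fixed amount of warming. A common textbook simplification, assumed here and not quoted from the Charney Report itself, writes the eventual (equilibrium) warming ΔT in terms of the CO2 concentration C, a pre-industrial baseline C_0, and a climate sensitivity S of about 3°C per doubling:

$$
\begin{aligned}
\Delta T &\approx S \log_2\!\left(\frac{C}{C_0}\right), \qquad S \approx 3\,^{\circ}\mathrm{C} \\
C = 2C_0 &\;\Rightarrow\; \Delta T \approx 3 \times \log_2 2 = 3\,^{\circ}\mathrm{C} \\
C = 4C_0 &\;\Rightarrow\; \Delta T \approx 3 \times \log_2 4 = 6\,^{\circ}\mathrm{C}
\end{aligned}
$$

Under this approximation, each successive doubling adds about the same warming, which is why sensitivity is quoted “for a doubling of CO2” rather than per part per million.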

Shortly after the release of the Charney Report, many American politicians began to oppose environmental action. The Reagan Administration worked to roll back environmental regulations. Obeying a radical free-market ideology, they gutted the Environmental Protection Agency and ignored scientific concerns about acid rain, ozone depletion, and climate change.

However, the Clean Air and Clean Water Acts had already meaningfully improved air and water quality, and other nations had followed suit with similar anti-pollution policies. Interestingly, the success of these regulations made it easier for researchers to observe global warming trends. Many aerosol pollutants had the unintended effect of blocking incoming solar radiation, so they had masked part of the emissions-driven greenhouse warming. As concentrations of these pollutants fell, a clear warming trend emerged. Scientists also corroborated ground temperature observations with satellite measurements, and ice cores provided independent evidence of the relationship between CO2 and temperature.

Sounding the Alarm

Despite his Midwestern reserve, James Hansen brought a stark message to Washington on a sweltering June day in 1988: “The evidence is pretty strong that the greenhouse effect is here.” Hansen led NASA’s Goddard Institute for Space Studies (GISS) and was one of the world’s foremost climate modelers. In his Congressional testimony, he said he was 99% certain that the observed warming was not natural variation. The next day, the New York Times ran the headline “Global Warming Has Begun, Expert Tells Senate.” Hansen’s powerful testimony made it clear to politicians and the public where scientists stood on climate change.

Also in 1988, the United Nations Environment Programme (UNEP) and the World Meteorological Organization (WMO) created the Intergovernmental Panel on Climate Change (IPCC). The IPCC was created to assess both the physical science of climate change and its many effects. To do that, the IPCC evaluates global research on climate change, adaptation, mitigation, and impacts. Thousands of leading scientists contribute to IPCC assessment reports as authors and reviewers. IPCC reports are among the largest collaborative scientific efforts ever undertaken and showcase the scientific process at its very best. The work is rigorous, interdisciplinary, and cutting edge.

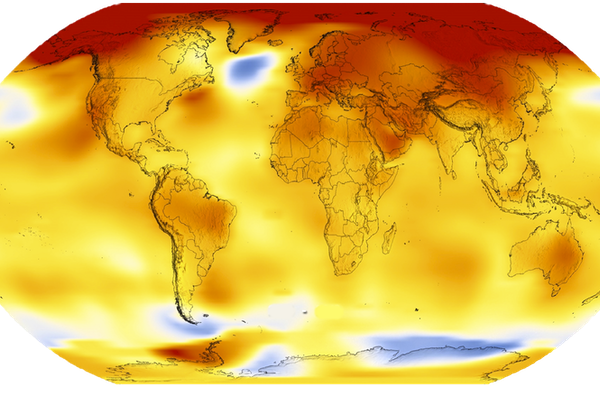

While the IPCC has contributed massively to our understanding of our changing world, its core message has remained largely unchanged for three decades. The First Assessment Report (FAR) in 1990 stated that “emissions resulting from human activities are substantially increasing the atmospheric concentrations of the greenhouse gases.” Since then, the dangers have only grown closer and clearer with each report. Newer reports not only forecast hazards but also document harms already under way. As the IPCC put it when releasing its 2018 Special Report on Global Warming of 1.5°C (SR15): “we are already seeing the consequences of 1°C of global warming through more extreme weather, rising sea levels and diminishing Arctic sea ice, among other changes.”

Wasted Time

As this story has shown, climate science is not a new discipline, and the scientific consensus on climate change is far older than many people think. Ironically, the history of climate denialism is far shorter. Indeed, the fossil fuel industry once sponsored the very science it would later dispute: a 1968 Stanford Research Institute study reporting that “significant temperature changes are almost certain to occur by the year 2000 and these could bring about climatic changes” was funded by the American Petroleum Institute. During the 1970s, fossil fuel companies conducted their own research showing that CO2 emissions would likely increase global temperature. Only with the political changes of the 1980s did climate denialism take off.

Not only is climate denialism relatively new, but it is uniquely American. No other Western nation has anywhere near America’s level of climate change skepticism. The epidemic of denialism has many causes. It is partly the result of a concerted effort by fossil fuel interests to confuse the American public about the science of climate change. It is partly due to free-market ideologues who refuse to accept a role for regulation. It is partly because of the media’s misguided notion of fairness and equal time for all views. It is partly due to the erosion of public trust in experts. And it is partly because the consequences of climate change are enormous and terrifying. Yet you can no more reject anthropogenic climate change than you can reject gravity or magnetism. The laws of physics operate independently of human belief.

However, many who bear blame for our current predicament do not deny the science. For decades, global leaders have greeted dire forecasts with rounds of empty promises. James Hansen has been frustrated by the lack of progress since his 1988 testimony: “All we’ve done is agree there’s a problem…we haven’t acknowledged what is required to solve it.” The costs of dealing with climate change are only increasing, and economic harms may run into the trillions of dollars. According to the IPCC’s SR15, avoiding some of climate change’s most devastating effects means keeping global temperature rise below 1.5°C above pre-industrial levels. That would likely require cutting net CO2 emissions by about 45% from 2010 levels by 2030 and reaching net zero around 2050. Had the world embarked on that path after Hansen spoke on Capitol Hill, it would have required annual emissions reductions of less than 2%. Now, according to the UN Environment Programme’s 2019 Emissions Gap Report, the same goal requires annual reductions of nearly 8%. The 1.5°C target appears to be slipping out of reach.
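The jump from under 2% to nearly 8% a year reflects how compounding punishes delay. What follows is a simplified illustration only, not the calculation behind either published figure: it assumes emissions E_0 are cut by the same fraction r every year for n years until they reach a target E_T, takes halving (E_T/E_0 = 0.5) as an example target, and ignores the growth in emissions that occurred while action was postponed, which makes the real requirement steeper still.

$$
\begin{aligned}
E_T &= E_0\,(1 - r)^n \quad\Longrightarrow\quad r = 1 - \left(\frac{E_T}{E_0}\right)^{1/n} \\
n = 40:\quad r &= 1 - 0.5^{1/40} \approx 1 - 0.9828 \approx 1.7\% \\
n = 10:\quad r &= 1 - 0.5^{1/10} \approx 1 - 0.9330 \approx 6.7\%
\end{aligned}
$$

Squeezing the same halving into a quarter of the time roughly quadruples the required annual cut, because for small r the rate scales approximately as ln 2 / n.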

We have known about the causes of climate change for a long time. We have known about the impacts of climate change for a long time. And we have known about the solution to climate change for a long time. An academic review earlier this year demonstrated the impressive accuracy of climate models from the 1970s. This is no longer a scientific issue. Science will continue to sharpen its forecasts for specific regions and timeframes, but the biggest unknown remains our own actions. What we choose today will shape the future.