History Meets Neuroscience

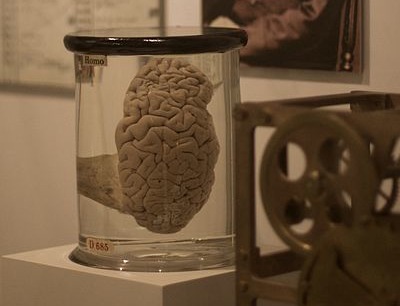

The brain of Charles Babbage, father of modern computing, on display at the Royal College of Surgeons in London. Credit: Wikipedia.

The facts are coming in and the truth isn’t a pretty one. It turns out that we’re addicts. All of us.

Okay, so most of us are not addicted to psychoactive drugs. What we are mildly addicted to, however, are dopamine, serotonin, and other neurochemicals manufactured right in the body. More accurately, we’re addicted to the behaviors that produce these chemicals. In the same way that your eyes detect photons and your skin registers changes in temperature, your neurochemical profile is constantly taking the pulse of the social climate. Do people like you? How's your standing? If things aren't going so well, you get a chemical nudge to spiff things up. So you eat a chocolate bar, log in to your Facebook account, pop in a CD of Christian rap, get a neck massage at a mall spa… and wallow in the mild high you're getting off your own dopamine or endorphins.

In recent decades the study of the brain has become one of the most exciting fields of academic inquiry. Findings like this are spilling out of psychology and neurobiology and into the human sciences. Economists put subjects into fMRI machines to find out if people really are rational actors. Specialists in law use brain imaging to learn what people think about fairness and justice, and political scientists do the same to find out how people respond to political parties and political slogans. Urban sociologists go out and measure levels of stress hormones in zones of poverty. But what about history? On the face of it, the idea of applying neuroscience to the study of the past seems like a non-starter. We can't stick a long-dead person in an fMRI machine, let alone take saliva samples. Yet neuroscience has important implications for the study of history.

Think about sweetened chocolate, Facebook, Christian music, and mall massages. All of these are recent human inventions, elements of larger trends in the global economic system, communications, individualism, and consumer culture. Grand old historical themes, yes—and it is astonishing to realize that some of the great transformations of history were driven, in part, by their neurophysiological outcomes. So it is high time to figure out why. The moment has come to develop a neurohistory.

A neurohistory is a new kind of history, operating somewhere near the intersection of environmental history and global history. There are probably as many definitions of it as there are practitioners, but one important branch of the field centers on the form, distribution, and density of mood-altering mechanisms in historical societies. These can be foods and drugs like chocolate, peyote, alcohol, opium, and cocaine. But the list of mechanisms also includes things we do to ourselves or to others: ritual, dance, reading, gossip, sport, and, in a more negative way, poverty and abuse. Every human society, past and present, has its own unique complex of mood-altering mechanisms, in the same way that each society has distinctive family structures, religious forms, and other cultural attributes. Neurohistory is designed to explore those mechanisms and explain how and why they change over time.

Okay, so we already knew that the chemical properties of coffee help explain the astonishing coffee revolution of the eighteenth century. What a neuroscientific approach shows is that human practices such as prayer, pornography, and jogging are just like coffee. They also provide a mild dose of feel-good neurochemicals. For some people, they can even become mildly addictive or compulsive. Knowing this is essential to understanding how practices like this developed and spread—and, in some cases, how they became outclassed or shunted aside by competitors in the art of altering moods.

Clearly, a neurohistory is not going to produce your usual study of dates, presidents, and famous battles. It is doubtful that neuroscience can contribute much to these venerable historical perspectives. Instead, a neurohistory forms part of a trend underway for some decades now that has de-emphasized the histories of modern nations. Like social history, economic history, or environmental history, neurohistory takes note of sweeping patterns and aggregate trends rather than the behavior of individuals. It is a way of doing history, moreover, that is integrated into a deep historical perspective. We share many features of our nervous system with other primates, not to mention mammals. These potentially fruitful connections make no sense when history’s chronological framework extends only as far back as the agricultural revolution or the rise of cities. To make space for neuroscience, history itself needs to extend itself into the deep human past.

History is an inherently interdisciplinary practice. Curiously, the trend toward interdisciplinarity has rarely been extended to exchanges between humanities and the natural sciences. Some of the natural sciences figure prominently in introductory lectures and textbooks. You can't take a broad world history survey these days without borrowing from historical climatology and environmental studies. Key stories like that of the Columbian exchange, for example, rely explicitly on scientific conclusions. But historians are only just beginning to welcome intellectual exchanges with the other sciences, especially the sciences of the brain.

So what kinds of things could a neurohistory tell us about the past? At this point the only models available are hypothetical ones. Consider, by way of example, the history of the monasteries of medieval Europe.

The medieval Christian church had an uncanny sense for the practices that induce the body to produce feel-good neurotransmitters and sternly forbade most of them. The weight of these prohibitions fell lightly on the laity, most of whom, like teenagers today, found it easy to ignore the prohibitions. But evasion was more difficult for the cloistered population. Sure, it was possible to get around the usual prohibitions on wine or meat. But monks and nuns, in theory, were supposed to disrupt their sleep for nightly prayers and services; in addition, their sex lives and opportunities for amusement were curtailed.

Beyond that, you weren't even supposed to talk to people: idle gossip was (and remains) strictly forbidden in some monasteries. In the eleventh and twelfth centuries, monastic communities even developed a sign language so that monks at table could ask for bread and suchlike without using words. The language was kept bare-bones to ensure that signs couldn't be used for nefarious speech. The rare individuals who were particularly ascetic, eager to avoid as much human contact as possible, even walled themselves alone in cells.

To any psychologist, these practices will surely evoke the sensory deprivation experiments first conducted in the 1950s. Experimenters at the time were curious to know what happened when subjects were cut off from sensory experience. Within a day or two, subjects began to hallucinate. Horrifying studies conducted in the 1970s showed that rhesus macaque monkeys went psychotic and suffered permanent neurological damage while isolated in the aptly named "Pit of Despair."

At the root of this tendency is the fact that we are a social species. We rely on a daily dose of talk. As the psychologist Robin Dunbar has pointed out, ordinary chitchat is the human counterpart to grooming among primates. Both act as a kind of routine daily upkeep for social relations. Like grooming, chitchat helps us generate a pleasant dose of dopamine, serotonin, and oxytocin. It's something most of us are mildly addicted to. As with any addiction, deprivation can create stress. These basic lessons have not been lost on those who design prisons or wish to administer torture in a way that does not leave a mark.

So it turns out that those in charge of monasteries were conducting a kind of isolation experiment. Monks or nuns were never supposed to have sex. They spent a good deal of time alone, in silent prayer. They weren't even supposed to talk.

Why? Monasteries, emphatically, were not trying to torture anyone. What abbots felt was that social isolation made cloistered people more holy. But historical explanations, like scientific ones, typically operate on two different levels. With neuroscience, we can formulate a hypothesis that operates at a more distant level of causation. Monastic social deprivation, after all, was probably stressful, at least for those who practiced it. To alleviate the stress, members of the regular clergy became dependent on other practices to deliver the chemical goods, namely, song, liturgy, ritual, and prayer. Regular or repetitive practices often help people alleviate stress and re-establish their sense of well-being. Social deprivation, in short, could have induced monks and nuns to be drawn toward the very practices that were deemed to be holy.

A neurohistorical perspective, then, might deepen our understanding of all forms of monasticism around the globe. It can also help us understand more recent phenomena. Europe in the century or so leading up to the French Revolution is a period especially inviting for a study of mood-altering mechanisms. According to some historians, this was the century of dechristianization, the age when Europeans ceased going regularly to church services and withdrew from other forms of public and private devotion. They turned, instead, to a range of mood-altering mechanisms newly available for purchase on the market.

Some of these were products of global trade, as Europeans put together a package of substances long available in other societies, including caffeine, tobacco, chocolate, and opium. Others were home-grown, such as cheap gin, fortified wines and spirits, and nitrous oxide. This era also witnessed the spread of novels, newspapers, pornography, cafés, and salons.

The fascinating thing is that observers back then knew that something was going on. A German historian, August Ludwig Schlözer (d. 1809), observed that the discovery of spirits and the arrival of tobacco, sugar, coffee and tea in Europe had brought about revolutions just as great as the wars and battles of his era. Others expressed their dismay about the frenzy for reading that had taken hold of the population. Girls were warned not to meddle with romances, novels, and chocolate, all of which were thought to inflame the passions and induce masturbation. The avid taste for following political gossip through the newspapers was likened to mania or a hot fever. The word "addiction" itself acquired its modern meaning in the late seventeenth century as observers scrambled to find a term that would encompass the changes they saw around them.

The story told by a neurohistorical perspective flows up to the present day. The growing density of market-based mood-altering mechanisms, for example, tracks the rise of capitalism and consumer culture. Look at the twentieth century, with television and horror movies, sports and tourism, recorded music, inexpensive psychoactive drugs, video games, evangelical church services, and the whole gamut of addictive things now available on the Internet. The impact has been enormous. Among other things, the great crisis of education is boredom, precisely because so many students have become addicted to things that alter their body-states, their synapses grown numb to the excitement of learning.

Even a visit to the mall has become mildly addictive. Shop, eat, gossip, take in a movie: a whole cocktail of neurotransmitters and hormones is everywhere on sale. The architecture of malls has even been designed to disorient the shopper and induce a mild panic that opens the purse strings. On a more sinister level, panic and stress are also generated by poverty, genocide, surveillance, and terror in all its dimensions. Modern practices and institutions bend and twist the brain in all kinds of ways.

So did those of the past, of course. Neurohistory suggests that culture and power have always been mediated through mood-altering mechanisms, and that these mechanisms are unique to the cultures in which they are embedded. Significantly, they were not the product of anyone’s design. They evolved instead against the steady backdrop of human neurophysiology, varying from one society to the next according to the material culture and technology particular to each. For my part, I think it is interesting to ask ourselves whether mood-altering mechanisms, like atmospheric carbon dioxide, particulate pollution, and environmental toxins, have been steadily growing in density since the industrial revolution. If so, then a neurohistory of modernity is like any environmental history that focuses on the dangerous byproducts of capitalism, understanding that capitalism itself needs to be understood in light of neurobiology. Such a perspective brings a new urgency to many of the questions that transfix us today.